The newest prestigious platform for developers. Why is Unreal Engine 5 so good and why is it the perfect match for the power of Mosaic 360º mobile mapping cameras?

Unreal Engine – What is it?

Unreal Engine, in the simplest terms, is an in-depth platform for game developers and filmmakers to create 3D content in real time.

It’s an entire engine where the creation of cities, landscapes, and people can be fine-tuned to minute detail.

We’re talking from splinters of wood ricocheting off the swinging doors of a western saloon to tiny daisies growing on the banks of a woodland stream, then right down to the unusually flared nostrils you give that creepy character you purchase potions from.

In other words, it has all the pre-designed components to develop an entire customized world for you to make as weird and as wonderful as you like.

Limitless worlds, actually…how far does your imagination stretch?

Unreal Engine is renowned in the entertainment industry as a highly prestigious toolset, packed with the programs and tools you need to assemble your own game. Top-tier stuff.

A quick bit of background.

Photo by Brett Jordan on Unsplash

The first Unreal Engine debuted in 1998, with each new generation bringing newfangled capabilities and fresh technology to the market. Every release arrived as a polished and refined upgrade, with more recent versions offering visual scripting so that much can be built without writing code. Global game developers and the public alike thought Unreal Engine 4, released in 2014, was spectacular – and it was.

Until Unreal Engine 5 came along.

Why is Unreal Engine 5 so good?

Unleashed in April of this year (2022), it has taken the industry by storm. No wonder why – the level of detail is insane.

Here we talk about each new feature and describe just how groundbreaking they are.

Let’s break it down section by section. First up, ‘Aziz, light!’

Lumen

This global illumination solution incorporates bounce lighting, and the scale of this tech ranges from kilometers to mere millimeters. You can control everything from the slow reveal of a deep, glowing sunset across the Serengeti to the light catching the rats that scurry across the puddles of a dingy New York alleyway.

Think just a wisp of light glinting off that wiry fur – this is the detail per scale that is available.

NOT ONLY THAT.

Lumen is an entirely reactive system, meaning that whatever you adjust, the light reacts to the change. If you move a chair in a brightly lit house, the shadow moves with it. The same goes for a shard of torchlight beaming through a rickety fence: the amount of light on the other side adjusts accordingly, accounting for each gap in said fence. Clever, right?

The creators explain it perfectly:

‘The system renders diffuse interreflection with infinite bounces and indirect specular reflections in huge, detailed environments.’ – www.unrealengine.com

How was this done before Lumen?

Previously, a method called path tracing was used to reproduce light. Path tracing is a ray tracing algorithm: it follows rays of light as they bounce around the scene between a light source, such as the sun, and the player’s viewpoint, by way of surfaces such as a polished floor, and works out how much of that light should realistically be seen.

Image by Lennart Demes from Pixabay

This calculation is run for every pixel of the output image, the image that you see with realistic light and reflection. The method was rather slow, however, because of the sheer number of pixels in each frame.

So it needed a little (or a lot) of time to render and sharpen up. Until then, the initial output was rather noisy, making for a grainier image.
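Why does a rushed render look grainy? A rough sketch in Python, assuming a toy model that is not taken from any engine: each ray for a pixel either finds the light or misses it at random, and the pixel's brightness is the average of its rays. The 30% hit chance, the function names, and the sample counts are all made up for illustration.

```python
import random
import statistics

def estimate_pixel(samples_per_pixel, rng):
    """Average random light samples for one pixel.

    Toy model (an assumption): each ray hits the light (1.0) with a
    30% chance or misses (0.0), so the true brightness is 0.3.
    """
    hits = sum(1.0 if rng.random() < 0.3 else 0.0
               for _ in range(samples_per_pixel))
    return hits / samples_per_pixel

def pixel_noise(samples_per_pixel, pixels=2000, seed=0):
    """Spread (standard deviation) of brightness across identical pixels."""
    rng = random.Random(seed)
    values = [estimate_pixel(samples_per_pixel, rng) for _ in range(pixels)]
    return statistics.pstdev(values)

if __name__ == "__main__":
    for spp in (1, 16, 256):
        print(f"{spp:>4} samples per pixel -> noise {pixel_noise(spp):.3f}")
```

With only a handful of rays per pixel, neighboring pixels that should look identical come out at quite different brightnesses, which is the grain. More rays smooth it out, but every extra ray costs rendering time.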

In video games, between 30 and 100 frames need to be rendered every second.
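To put those frame rates in perspective, the time available for each frame is simply one second divided by the frame rate. A quick sketch in Python (the function name is our own, and the 60 fps row is an added illustrative value):

```python
def frame_budget_ms(frames_per_second):
    """Milliseconds available to render a single frame."""
    return 1000.0 / frames_per_second

for fps in (30, 60, 100):
    print(f"{fps} fps -> {frame_budget_ms(fps):.1f} ms per frame")
```

At the top end of that range, everything on screen, lighting included, has to be finished in about a hundredth of a second.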

So, as developers didn’t want their players to play through a grainy pixel hailstorm, they ‘faked’ the lighting ahead of time in a process known as light baking.

This step pre-rendered the expected light by running the path tracer beforehand, which eliminated the rendering wait during play. The pre-rendered ‘lit’ result was then laid invisibly over the original frame.

In this format, a light flicks on in a game, and the room is instantly illuminated.

However, there is a catch. Pre-rendered global illumination is not real-time.

As an example let’s take a nice shiny kitchen. Before you enter the kitchen it is dark, you flick the light on and instantly you see the gleaming surfaces, the knives hanging on the wall, and a collection of pots on the counter. Business as usual in the kitchen.

But then you wander over to the counter, pick up a large pot that is casting shadows across the counter, and move it over to the other side of the room. The shadow, most unfortunately and unrealistically, remains, because the light and dark areas have already been determined in the pre-rendered frame.

Pre-rendered lighting was instant but lacked accuracy. To resolve that, each element, movement, and shadow had to be determined in advance to stay in sync, and you can imagine how time-consuming that was.

Lumen is exceptional in that it delivers instant, real-time lighting. Without all that wait.

So when you pick up that pot, its shadow disappears, and a new one appears when you place it on the other counter.
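The pot example can be sketched in a few lines of Python. This is a toy illustration only, not how Lumen or any real engine works: the counter is a row of eight cells, and we assume (purely for the example) that the pot shades its own cell and the one to its right.

```python
def shadow_mask(pot_position, counter_length=8):
    """Return which counter cells are in shadow (True) for a pot at a cell.

    Toy rule (an assumption for illustration): the pot shades its own
    cell and the one to its right.
    """
    return [i in (pot_position, pot_position + 1)
            for i in range(counter_length)]

def render(mask):
    """Draw the counter: '#' is shadow, '.' is lit."""
    return "".join("#" if shaded else "." for shaded in mask)

# Pre-rendered approach: shadows are computed once, before play starts.
baked = shadow_mask(pot_position=1)

# The player moves the pot from cell 1 to cell 5.
new_position = 5

stale = render(baked)                        # pre-rendered: shadow stays put
dynamic = render(shadow_mask(new_position))  # real-time: shadow follows the pot

print("pre-rendered:", stale)
print("real-time:   ", dynamic)
```

The pre-rendered row still shows the shadow where the pot used to be, while the real-time row recomputes it for the pot's new spot.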

Extraordinary magic.

This is game-changing in so many ways, and so is the next feature that we are going to explore.

Nanite

Nanite is an entire geometry system that allows developers to construct games with incredible detail. Film-quality art composed of millions of polygons can be imported, replicated, and scaled, all whilst maintaining a real-time frame rate.

Let’s go back a step.

CGI is exquisite, especially now that designers have pushed the boundaries of reality and literally ANYTHING can be created. Dinosaurs, dragons, galaxies. Under each roaring T-Rex and rippling dragon scale is a mesh of flat geometric shapes called polygons. The more polygons, the more detailed the CGI, but the more polygons an item or landscape has, the longer it takes to render.

Photo by Frances Gunn on Unsplash

Usually, games use LOD (Level of Detail), meaning that the further away an object is in a frame, the less detail it has, so the fewer polygons it uses and the quicker it is to render.
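As a rough idea of how distance-based LOD works, here is a minimal Python sketch. The distance thresholds and polygon counts are hypothetical values chosen for illustration, not figures from Unreal Engine or any particular game.

```python
# Hypothetical LOD table: (maximum distance in meters, polygon count).
LOD_LEVELS = [
    (10.0, 100_000),   # LOD 0: close-up, full detail
    (50.0, 20_000),    # LOD 1: mid-range
    (200.0, 2_000),    # LOD 2: far away
]
FALLBACK_POLYGONS = 200  # used beyond the last threshold

def polygons_for_distance(distance_m):
    """Pick the polygon budget for an object at the given distance."""
    for max_distance, polygons in LOD_LEVELS:
        if distance_m <= max_distance:
            return polygons
    return FALLBACK_POLYGONS

for d in (5, 30, 150, 1000):
    print(f"{d:>5} m -> {polygons_for_distance(d):,} polygons")
```

Traditionally an artist has to build each of those simpler versions of the model, which is part of what makes very detailed assets expensive to use.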

Nanite is groundbreaking in the same way that Lumen is. Objects in the Unreal Engine 5 Nanite system can have millions of polygons without the need for hand-built LODs.

New technology coupled with the Quixel Megascans library provides film-quality visuals using polygons in the hundreds of millions to create vast detail.

Each item is moveable, scalable, and perfectly rendered. This brings us nicely to where these items come from.

Megascans and Quixel Bridge

Image by Mrexentric from Pixabay

Megascans is a library of 16,000 objects. Trees, rocks, surface materials – a big cupboard of ingredients for you to build with. All hyper-realistic, because each asset was scanned from the real thing by a real person.

Thousands of different objects were picked and scanned one by one, from a multitude of locations around the world, to build a library for you to use and play with. Amazing!

This is where we come in.

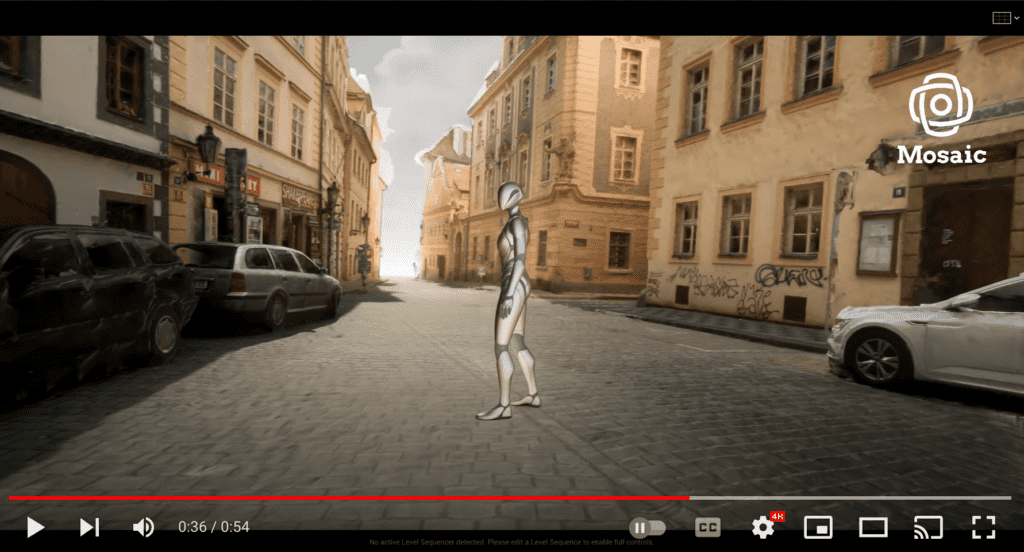

Mosaic’s mobile mapping cameras and Unreal Engine 5

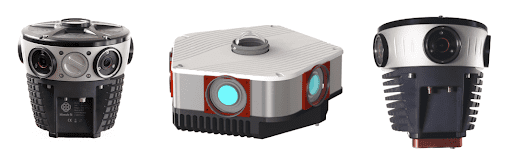

Our 12k, 13k, and 22k resolution cameras can collect environment footage and store it for later use in impeccably high quality, adding to the realism and intensity of a game.

For capturing rugged terrain in full 360°, Mosaic X is an AI-compatible, 13k resolution camera made for mobile observation and content capture.

It is waterproof, dustproof, and tackles hot temperatures with the vigor of an Englishman in Benidorm. This camera will shoot HD imagery of such a standard to form true realism when converted and immersed in a video game.

Check out a more detailed spec here listing the product features and capabilities.

Once the images are collected by the sourcing team, a program called RealityCapture transforms the content into 3D objects that can be used in the engine. It does this through photogrammetry.

The process of photogrammetry involves snapping many overlapping images of a structure, or environment, and then melding them together to form a digital 3D model.
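To get a feel for what "many overlapping images" means in practice, here is a small Python sketch that estimates how many photos are needed along a straight path. The function name, the 10 m footprint, and the overlap values are illustrative assumptions, not Mosaic or RealityCapture specifications.

```python
import math

def shots_needed(path_length_m, footprint_m, overlap):
    """Number of photos needed to cover a straight path.

    footprint_m: length of scene covered by one photo along the path.
    overlap: fraction (0 up to, but not including, 1) shared between
             neighboring photos.
    """
    if not 0 <= overlap < 1:
        raise ValueError("overlap must be in [0, 1)")
    if path_length_m <= footprint_m:
        return 1
    spacing = footprint_m * (1 - overlap)  # distance between shots
    return 1 + math.ceil((path_length_m - footprint_m) / spacing)

# Hypothetical example: a 100 m stretch, each photo covering 10 m.
for overlap in (0.5, 0.75):
    print(f"{overlap:.0%} overlap -> {shots_needed(100, 10, overlap)} photos")
```

The more the images overlap, the more photos are needed for the same stretch, but the more shared detail the software has to work with when melding them together.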

This can also be applied to entire landscapes. We have a high-caliber 22k resolution camera that captures content and maps entire cities in perfect resolution, from which 3D models can then be generated.

Here is a great video detailing more.

So that’s the scenery covered, what about the inhabitants?

MetaHuman

MetaHuman is a hyper-realistic character toolset for configuring people in every way imaginable. Remember those nostrils? With Unreal Engine 5, characters can be produced in mere minutes.

How?

Photo by Anton Palmqvist on Unsplash

The data for these characters is actually taken from real-life scans, giving true realism. MetaHuman stores a huge selection of skin tones, hairstyles and shades, body stances, and movements.

You may have heard of the term Mesh to MetaHuman.

Mesh to MetaHuman is a feature of the MetaHuman plugin where you can create YOU, without having a mirror propped up next to the laptop.

Ok, we’ve come a long way from that.

Mesh to MetaHuman does, however, take the traditional scanning and modeling tools and boost them with landmark tracking. That means taking your recognizable facial and bodily features and placing them over a framework built into the engine.

MetaHuman enmeshment.

Image by Gerd Altmann from Pixabay

The bodily features further enhance this, and you can play around with how they stand and walk. These characters have also been modeled with pre-emptive movement, adorably titled ‘active ragdoll physics’, which means that your MetaHuman will even try to protect their head if they accidentally trip over.

The realism of this feature has to be seen to be believed.

One major question from players and developers around the world: what about everything designed in the previous platform, Unreal Engine 4? Would all that work and all those worlds go to waste?

Great news! Those projects you made, with all their characters, can be easily migrated into Unreal Engine 5.

So sit back and watch your projects blossom within the new features of Lumen and Nanite.

So, what does this all mean?

What’s to be gained from Unreal Engine 5?

Literally everything.

It’s faster, sharper, and more efficient. Using its cutting-edge technology, it brings graphics and game development to the very brink of reality.

The realism of its features relies on the HD quality content that is initially captured in the real world, in order to be translated into the fantasy one.

Our 360-degree mobile mapping camera can help bring this to life.

‘At Mosaic we are pushing the limits of HD mapping; our mission is to build a better view of the world’ – and a better vision of the worlds you create online.