*** UPDATED AUGUST 2023 – Scroll to the bottom of the article for more videos highlighting the differences and our latest NeRF creation.

How to Create Realistic 3D Models with Two Advanced Technologies: NeRF vs Photogrammetry Explained

Realistic 360° Imaging

Realistic 360° imaging is key to creating 3D models. Photogrammetry is one method of turning that imagery into a model; NeRF is another, which uses 360° imagery in collaboration with artificial intelligence.

Here we compare NeRF vs photogrammetry, explain what each process is used for, and discuss their potential implications for humanity’s existence.

Dramatic entrance established, let’s explain what photogrammetry and NeRF actually do.

What is Photogrammetry?

Photogrammetry is the technique (or art, depending on who you are talking to) of taking multiple overlapping photographs of an object or environment. Those images are then processed via specialist technology to create realistic 3D models or maps.

The collection of 2D images, taken from all around the subject, is then combined to form a 3D result. This is achieved by constructing a 3D mesh from a series of still images, which can be photographed in rapid succession at several frames per second.
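Under the hood, the key idea is triangulation: a point that appears in two overlapping photos lies on a ray from each camera, and its 3D position is where those rays (nearly) meet. The minimal Python sketch below assumes the camera centres and ray directions are already known; real photogrammetry software has to estimate them from the images and uses more robust least-squares methods over many views. The function name `triangulate_midpoint` is illustrative, not taken from any particular tool.

```python
def sub(a, b):
    return [x - y for x, y in zip(a, b)]


def add(a, b):
    return [x + y for x, y in zip(a, b)]


def scale(a, s):
    return [x * s for x in a]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def triangulate_midpoint(c1, d1, c2, d2):
    """Estimate a 3D point from two camera rays (midpoint method).

    Ray i starts at camera centre c_i and points along direction d_i.
    Because of noise the rays rarely intersect exactly, so we find the
    closest point on each ray and return the midpoint between them.
    """
    w0 = sub(c1, c2)
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; no unique intersection")
    t1 = (b * e - c * d) / denom  # position along ray 1
    t2 = (a * e - b * d) / denom  # position along ray 2
    p1 = add(c1, scale(d1, t1))
    p2 = add(c2, scale(d2, t2))
    return scale(add(p1, p2), 0.5)
```

For example, two cameras at `[0, 0, 0]` and `[4, 0, 0]` looking along `[1, 2, 5]` and `[-3, 2, 5]` both see the point `[1.0, 2.0, 5.0]`, which is what `triangulate_midpoint([0, 0, 0], [1, 2, 5], [4, 0, 0], [-3, 2, 5])` returns. Overlap matters precisely because a point must appear in at least two photos to be triangulated.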

A brief overview of the standard steps in photogrammetry is as follows:

How is Photogrammetry Done?

Image Capture

A high-resolution camera will obtain better results, as clarity from the beginning ensures a sharper end result, and overlap between images is crucial. Between 20 and 250 images are recommended, though this will of course vary depending on the size of your object.

The photos are snapped successively in a circle around the item, from top to bottom, with an overlap of about 50%. For more detailed areas, additional photographs are taken to ensure full coverage.
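To make the circling pattern concrete, here is a hypothetical capture planner in Python. It assumes each photo covers roughly `coverage_deg` degrees of the object's circumference (a figure you would estimate for your own lens and shooting distance), so consecutive shots with a given overlap must be rotated by `coverage_deg * (1 - overlap)` degrees. Rings at several elevations give the top-to-bottom coverage.

```python
import math


def photos_per_ring(coverage_deg, overlap):
    """Number of shots needed for one full circle around the object.

    Assumption: each photo covers roughly `coverage_deg` degrees of the
    object's circumference, so consecutive shots that overlap by the
    fraction `overlap` must be rotated by coverage_deg * (1 - overlap).
    """
    if coverage_deg <= 0 or not 0 <= overlap < 1:
        raise ValueError("need coverage_deg > 0 and 0 <= overlap < 1")
    step = coverage_deg * (1 - overlap)
    # Round first so float noise (e.g. 60.00000000000001) doesn't add a shot.
    return math.ceil(round(360 / step, 9))


def plan_orbit(radius, elevations_deg, coverage_deg=30.0, overlap=0.5):
    """Camera positions (x, y, z) on rings around an object at the origin.

    One ring per elevation angle, listed from top to bottom; z is up.
    """
    n = photos_per_ring(coverage_deg, overlap)
    positions = []
    for elev in elevations_deg:
        e = math.radians(elev)
        for k in range(n):
            az = 2 * math.pi * k / n
            positions.append((
                radius * math.cos(e) * math.cos(az),
                radius * math.cos(e) * math.sin(az),
                radius * math.sin(e),
            ))
    return positions
```

With the defaults (30° coverage, 50% overlap), each ring needs 24 shots, so three rings at 60°, 30° and 0° elevation give 72 photos, inside the 20 to 250 range recommended above. Raising the overlap or adding rings for detailed areas increases the count accordingly.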

There are guidelines on what works well and what does not when it comes to capturing images.

- The object needs to remain stationary

- Lighting needs to be adequate and consistent

- The object should fill most of the frame and be as close and in as sharp focus as possible

- The background that the object is placed on should have a good color contrast

- Shiny items do not photograph well due to reflections, and the same applies to transparent objects. Some of this can be corrected once uploaded, but generally a matte surface is best

Upload

Once the images have been captured they then need to be run through the software and stored in a library. The images will go through quality assurance to check their suitability and ensure the guidelines mentioned above are met. Photos can also be edited here if only a small tweak is required, to avoid reshooting the object completely.
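One common automated check for the sharp-focus guideline is the variance of the Laplacian: sharp photos have strong edges, so a Laplacian filter responds strongly and its output varies a lot, while blurry photos give a low variance. The Python sketch below works on a grayscale image given as a 2D list. It illustrates the technique and is not necessarily what any given photogrammetry package does; the threshold of 100 is a hypothetical starting point that would need tuning per camera and scene.

```python
def laplacian_variance(gray):
    """Variance of the 4-neighbour Laplacian over the image interior.

    `gray` is a 2D list of brightness values (rows of equal length).
    """
    h, w = len(gray), len(gray[0])
    if h < 3 or w < 3:
        raise ValueError("image must be at least 3x3 pixels")
    responses = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Sum of the four neighbours minus four times the centre pixel.
            lap = (gray[y - 1][x] + gray[y + 1][x] + gray[y][x - 1]
                   + gray[y][x + 1] - 4 * gray[y][x])
            responses.append(lap)
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)


def is_sharp_enough(gray, threshold=100.0):
    # Hypothetical threshold: tune it for your camera and lighting.
    return laplacian_variance(gray) >= threshold
```

A perfectly uniform image scores 0, while a high-contrast checkerboard scores very high; real photos fall in between, which is why the threshold has to be calibrated on a few known-good and known-blurry shots.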

About halfway down this page is a great comparison of different software available, from online tools to mobile apps, ranking quality, features, and price.

Composition

The software will do most of the work regarding the actual composition and will automatically build the 3D model. It is often a case of dragging and dropping images and selecting a few options.

There are, however, additional features that enable the user to alter the set of 360° imagery before the process is completed.

- Image Matching – identifies which photographs overlap and pairs up corresponding points between them

- Feature Extraction – detects recognizable features (distinctive points such as corners and edges) in each image so they can be matched across images

- Delighting (as in the removal of light, not feeling delighted) – removes the shadows and highlights captured in the photographs, evening out the object’s surface so it can be relit later
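Feature extraction and image matching usually work together: each detected feature is described by a vector of numbers (a descriptor), and matching pairs up descriptors that are close together across two overlapping photos. The sketch below shows brute-force nearest-neighbour matching with Lowe's ratio test, a widely used technique. The descriptors here are plain Python lists, whereas real tools use descriptors such as SIFT and much faster search structures; the function name and the 0.75 ratio are illustrative choices.

```python
import math


def match_features(desc_a, desc_b, ratio=0.75):
    """Match descriptors from image A to image B using Lowe's ratio test.

    Each descriptor is a list of floats. A match is kept only if the
    nearest neighbour in B is clearly closer than the second nearest.
    Returns a list of (index_in_a, index_in_b) pairs.
    """
    if len(desc_b) < 2:
        raise ValueError("need at least two descriptors in image B")
    matches = []
    for i, da in enumerate(desc_a):
        # Distance from this descriptor to every descriptor in B, closest first.
        dists = sorted((math.dist(da, db), j) for j, db in enumerate(desc_b))
        (best, j_best), (second, _) = dists[0], dists[1]
        if best < ratio * second:
            matches.append((i, j_best))
    return matches
```

The ratio test is what discards ambiguous points: if a feature is roughly equally close to two candidates (say, on a repetitive brick wall), no match is kept, which avoids feeding wrong correspondences into the 3D reconstruction.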

The software comparison mentioned above explains these features, and many more, in further detail.

Post-Processing

So, you’ve tweaked the photographs as required and the program has generated the 3D model, so now you can see it in all its resplendent glory.

The 3D model is built on a mesh: a structure of connected polygons (usually triangles) that together define the object’s height, width, and depth.

However, the model is rarely ready for use straight away.

The model may need resizing or reorienting, imperfections may need fixing, and mesh cleanup will usually be required. Cleanup is needed when individual polygons are visible, leaving the surface uneven and noisy. Many tools have these features built in to cover your post-processing.

The file is then saved and it is ready to go.
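To illustrate the mesh structure and the resizing and cleanup steps described above, here is a minimal Python sketch of a triangle mesh stored as a list of vertices plus faces that index into it. The `Mesh` class is hypothetical, it assumes the y axis is "up", and its cleanup step only removes degenerate triangles; real tools also deal with noise, holes and stray fragments.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Mesh:
    """Hypothetical minimal triangle mesh (y axis assumed to be 'up')."""

    vertices: list[tuple[float, float, float]]  # 3D points
    faces: list[tuple[int, int, int]]  # each triangle indexes three vertices

    def extents(self):
        """Width (x), height (y) and depth (z) of the bounding box."""
        xs, ys, zs = zip(*self.vertices)
        return (max(xs) - min(xs), max(ys) - min(ys), max(zs) - min(zs))

    def scaled_to_height(self, target_height):
        """Return a copy uniformly rescaled so it is `target_height` tall."""
        height = self.extents()[1]
        if height == 0:
            raise ValueError("mesh is flat along y; cannot scale by height")
        s = target_height / height
        scaled = [(x * s, y * s, z * s) for x, y, z in self.vertices]
        return Mesh(scaled, list(self.faces))

    def without_degenerate_faces(self):
        """Return a copy without triangles that repeat a vertex index."""
        kept = [f for f in self.faces if len(set(f)) == 3]
        return Mesh(list(self.vertices), kept)
```

Scaling uniformly (the same factor on every axis) keeps the object's proportions intact, which matters when, for example, a scan has to be printed at a specific height.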

Who uses Photogrammetry?

3D models created from realistic 360° images are incredibly useful in numerous interesting sectors:

Engineering, Architecture & Design

Perhaps one of the more expected areas. Engineers and designers use photogrammetry to build models that help them fit parts to an existing item, and also to reverse-engineer entire structures. For architects, photogrammetry aids visualization, site planning, and construction monitoring.

Healthcare

Medical professionals use this technology when fitting a prosthesis, in dentistry, and when reshaping the skull or face of a patient. For babies requiring a helmet, for example, photogrammetry is an effective way to examine and monitor results. However, it can sometimes be difficult to keep the patient sitting still as required.

Arts & Culture

Photogrammetry opens up a new dimension for artists and photographers. Museum curators can create virtual collections to showcase to the public, and cultural heritage landmarks can be virtually reconstructed and preserved.

Game Development

Developers use photogrammetry to aid in the design of game environments and objects. Here you can read more about how realistic 360° imagery is used in game development.

Forensic Research

The police can reconstruct virtual crime scenes to gain insight into open cases, and also create virtual training environments.

Archeologists & Cartographers

Unexplored parts of the world can be mapped out, from the land to the ocean. Known areas can be mapped significantly more quickly using photogrammetry, and Google Earth also uses this process to create 3D imagery.

Plus you, should you wish to own a tiny little 3D printed figurine of yourself.

That’s an overview of photogrammetry and how it relates to realistic 360° imagery. So what’s NeRF?

What is NeRF?

NeRF stands for Neural Radiance Field.

NeRFs use neural networks to represent and render realistic 3D scenes based on an input collection of 2D images.

Nvidia – one of the leaders in NeRF technology

But Mosaic CEO Jeffrey Martin hesitates to use this simple definition and instead offers this one:

It’s a novel view or a series of novel views (aka a video) derived from a discrete set of images of a scene. And the closer the novel views are to the original images the more believable it looks.

Jeffrey Martin

Considering how young the technology is, there is sure to be some debate about how to define it and explain it in a way that people can easily understand.

NeRF, like photogrammetry, is used to create realistic scenes, and it is also ‘designed to generate volumetric representations of a scene, and render high-resolution photorealistic novel views of real objects and scenery.’

The process trains a neural network on the user’s images. The network learns to predict the color and density of the scene at any point in space, which lets it fill ‘gaps’ between the original viewpoints with its best estimates and render a complete scene.
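The rendering step can be sketched in a few lines. In the original NeRF formulation, the network predicts a density and a color at sample points along each camera ray, and the pixel color is a weighted sum: each sample contributes its opacity, 1 - exp(-density × spacing), multiplied by the fraction of light that survives all the samples in front of it. The Python sketch below takes the predicted values as plain inputs instead of calling a trained network, and it leaves out everything Instant NeRF adds for speed (such as its hash-grid encoding and CUDA kernels).

```python
import math


def render_ray(densities, colors, deltas):
    """Composite samples along one camera ray, NeRF-style.

    densities[i]: volume density sigma_i at sample i (>= 0)
    colors[i]:    (r, g, b) predicted at sample i
    deltas[i]:    distance from sample i to the next sample
    Returns the pixel colour and the per-sample weights.
    """
    transmittance = 1.0  # fraction of light not yet absorbed along the ray
    pixel = [0.0, 0.0, 0.0]
    weights = []
    for sigma, rgb, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        w = transmittance * alpha
        weights.append(w)
        pixel = [p + w * c for p, c in zip(pixel, rgb)]
        transmittance *= 1.0 - alpha
    return pixel, weights
```

For a ray that passes through empty space (density 0) and then hits a dense red surface, `render_ray([0.0, 1e6], [(0, 0, 1), (1, 0, 0)], [1.0, 1.0])` returns the pixel `[1.0, 0.0, 0.0]`: the empty sample gets zero weight and the surface absorbs all the remaining light. Because every step is differentiable, training can compare rendered pixels with the input photos and adjust the network accordingly.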

So where photogrammetry requires overlapping imagery from every angle, NeRF can be a little less rigid, as its learned model can work out smaller gaps itself.

This is exceptionally groundbreaking, and the speed and potential outstrip photogrammetry, which was already fairly impressive. In the race between NeRF vs photogrammetry, NeRF is sailing ahead.

Nvidia Corporation

Nvidia is a global leader in graphics processors and artificial intelligence. An American company founded in the early ’90s, it was worth over $390 billion as of August 2022.

Its new software, Nvidia Instant NeRF, was released in 2022.

AI is turning 2D images into 3D scenes in seconds

Nvidia

How is NeRF Done?

Instant NeRF is a neural rendering framework that learns a 3D scene in seconds and renders that scene in mere milliseconds.

The process follows the same fundamentals as photogrammetry, beginning with overlapping high-resolution imagery or recordings. However, unlike photogrammetry, NeRF doesn’t require every image to be 50% overlapped. Overlaps are still needed, but AI helps out by filling in the little gaps left behind.

From there it gets a little technical; a full walkthrough of getting started with NeRF is explained well here.

When the images’ positions are prepared for your first Instant NeRF, launch the graphical user interface through Anaconda using the included Testbed.exe file compiled from the codebase. The NeRF automatically starts training your NeRF.

You will find a majority of visual quality gained in the first 30 seconds; however, your NeRF will continue to improve over several minutes. The loss graph in the GUI eventually flattens out and you can stop the training to improve your viewer’s framerate.

Getting Started with NVIDIA Instant NeRFs by Jonathan Stephens
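In the Instant NeRF GUI you judge the flattening loss graph by eye. If you script training yourself, a check along these lines could make the same call automatically: compare the average loss over the most recent window of steps with the window before it, and treat a tiny relative improvement as flat. The function `has_plateaued` and its default thresholds are hypothetical and not part of Instant NeRF.

```python
def has_plateaued(losses, window=100, min_rel_improvement=0.01):
    """Return True when the training loss has effectively flattened out.

    Compares the mean of the latest `window` losses with the mean of the
    `window` losses before it; a relative drop smaller than
    `min_rel_improvement` (1% by default) counts as a plateau.
    """
    if len(losses) < 2 * window:
        return False  # not enough history to judge yet
    previous = sum(losses[-2 * window:-window]) / window
    recent = sum(losses[-window:]) / window
    if previous <= 0:
        return True  # loss already at zero; nothing left to gain
    return (previous - recent) / previous < min_rel_improvement
```

Averaging over a window smooths out the step-to-step noise visible in a loss graph, so a single lucky or unlucky step doesn't stop training too early or keep it running forever.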

Animations can then be saved and edited. Unlike with photogrammetry, the entire background is also captured, opening up possibilities to envision the world in new ways, and at great speed.

Who Uses NeRF?

NeRF is likely to be used in the same fields listed above. But given its accessibility (just about everyone owns a smartphone, and software is becoming cheaper as the market expands), the process is not limited to professionals.

The opportunity to capture memories and significant moments, stored forever in 3D, is as unnerving as it is exciting.

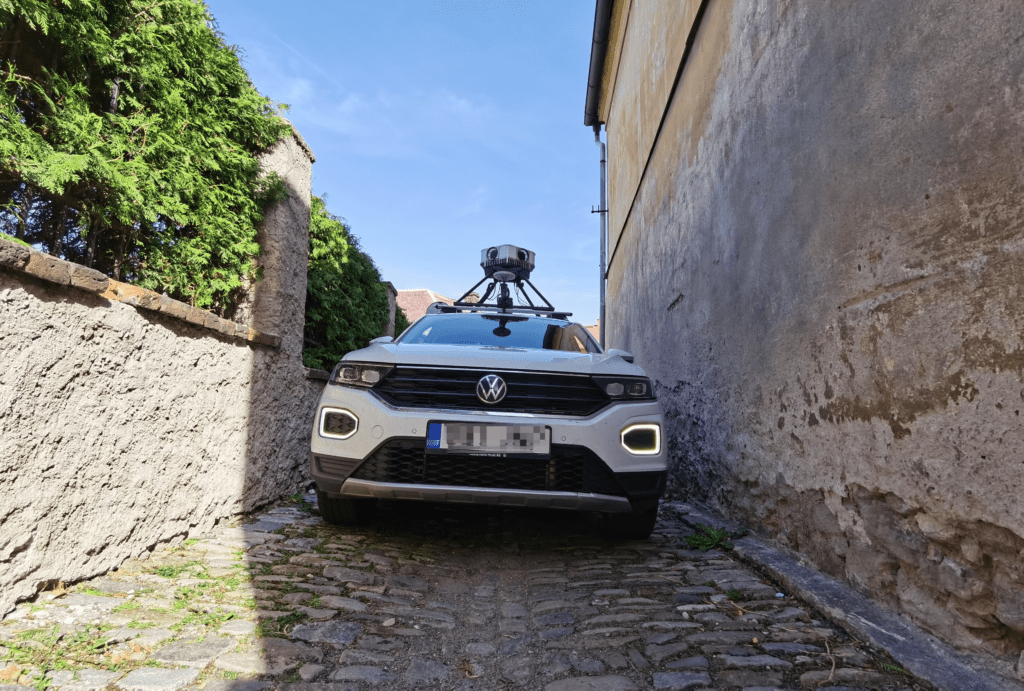

Here at Mosaic, we’ve been running our own tests and it seems to work very well with our own cameras as well.

Some of our customers who are looking to present their worlds in a visually appealing way may find this helpful. For the VFX and movie industries, they just want things to look good and lit correctly. They need the view to match the images, rather than a traditional 3D model from photogrammetry.

Jeffrey Martin

NeRF vs Photogrammetry with Mosaic Viking Camera Data

Check out the difference between the two methods in question. We used data from the same test drives to process and create a NeRF model and a photogrammetric 3D model.

Our first attempt at NeRF: (August 2022)

Data processed with our photogrammetry pipeline:

Latest attempt with NeRF: (August 2023)

NeRF vs Photogrammetry – and the Winner Is...

Photogrammetry gets great results, and a simple setup of just a smartphone and a mobile app can achieve them. Of course, for individuals starting out, the results will depend on practice with both the 360° imagery capture and the post-processing in the application, and the first try is unlikely to produce a perfect 3D model.

Instant NeRF is faster, more intelligent, and a big step up in terms of process efficiency.

So in the NeRF vs photogrammetry comparison – NeRF is the clear winner!

Conclusion

It’s an interesting thing, technology. It gets faster and faster, and its integration into everyday life grows deeper and more rooted.

This kind of accessibility opens many doors. Take imagery. We moved from flipping through photo albums at our grandparents’ house to AirDropping images from an iPhone. Now we share immaculately shot and filtered video recordings with background music.

In a few years, it could be an entire virtual realm that families can step into and experience almost firsthand, using Instant NeRF technology.

This kind of tech can be very useful for everyday people. Tenants could view houses and flats from their own homes, and estate agents could book viewings back to back for prospective buyers to ‘walk’ around.

Google Maps could push it even further and not limit the virtual visits just to famous landmarks, but open up entire cities.

Then think of the possibilities for archived footage of defining moments in history to be brought to life – iconic moments in sport, televised speeches of great political leaders, symbolic protests, oppressions and uprisings. These are some beautiful and terrible events that people could experience and truly learn from.

There is a darker side. False or entirely fabricated news stories and photoshopped images circle the globe by the thousands, if not millions, every single day.

Synthetic media like deep fakes are widely shared for their clever and amusing content, and whilst Jerry Seinfeld cameoing in Pulp Fiction is harmless fun, politicians are also targeted. Due to the sheer amount of footage available online, it’s not difficult for creators to mimic and fabricate entire public announcements.

Check out this article we posted a while back about the importance of geospatial technology and data at the onset of the war in Ukraine.

Currently, the examples online are mostly for amusement. A darker example, however, was a recent, poorly executed deep fake of the Ukrainian president. While clearly false, it displays unmistakable malicious intent.

With the ability to create entirely realistic 360° scenes with NeRF technology, those who already distrust digital footage may grow ever more suspicious. The concerns are not only fake news, which has already shown its potential for huge consequences, but also identity theft, framing, or blackmail. This is where NeRF and photogrammetry are poles apart.

Stephen Hawking said that the colonization of other planets is a necessity for the continuation of human existence. Reasons such as climate change, war, or an incurable virus would drive us out.

But what if he didn’t mean it in the physical sense? What if the virtual world is the next intended step for humans? The Metaverse is a not-so-far-off model already in the making.

NeRF and photogrammetry don’t mean this will happen, however wild the idea may seem. But they are certainly a step closer to the possibility.

Where we see the benefits, implications, and uses of NeRF:

NeRF is a very new technology, but the results that people are posting look very intriguing because it handles a few of the shortcomings of photogrammetry, such as the difficulty of rendering shiny and transparent objects.

It’s a completely different technology than photogrammetry and solves things that no one has been able to solve before.

As I understand it, it models the behavior of light, which is why it is able to handle shiny and transparent objects such as windows and glass.

NeRF is producing some pleasant-looking results. So for applications that don’t need precise measurements but do need something visually pleasing, such as VFX or games, there is a lot of exciting progress ahead and many potential use cases.

Jeffrey Martin

Exciting times we’re in, huh? Want to stay informed about our progress with NeRF vs Photogrammetry? Stay connected by subscribing for more content about the innovative worlds of mobile mapping, photogrammetry and so much more!